Of all current technological innovations, artificial intelligence has undoubtedly generated the most hype, and the most fear. Wherever AI goes, the image of a dystopian future is never far away, nor is a conference room full of nervous lawyers.

The reality, of course, is quite different. Some corporate legal teams, particularly those already in the tech field or at companies handling huge amounts of customer data, are ahead of the curve. But for many in the legal world, the future is a far-off land – for now.

‘When you hear people saying that tomorrow everyone will be replaced by robots, and we will be able to have a full, sophisticated conversation with an artificial agent… that’s not serious,’ explains Christophe Roquilly, Dean for Faculty and Research, EDHEC Business School.

‘What is serious is the ability to replace standard analysis and decisions with robots. Analysis further than that, when there is more room for subjectivity and when the exchange between different persons is key in the situation… we are not there yet.’

Much of the conversation around AI, particularly in the in-house world, remains a conversation about potential. The expanding legal tech sector is teeming with AI-based applications in development, but on the ground, even in the legal departments of many of Europe’s biggest blue chips, concrete application appears to be limited.

‘I think the US may be a bit further down the road than Europe. AI is coming, people are thinking about it, some in a partial way, but it will be developing quickly in the coming years. It may have more or less the same role as the internet had 15 years ago changing radically the way we work, but we’re not there yet,’ says Vincent Martinaud, counsel and legal manager at IBM France.

‘While the legal function may change to the extent that there are tasks we may not do anymore in the future, does that mean we will be disrupted? I don’t think so.’

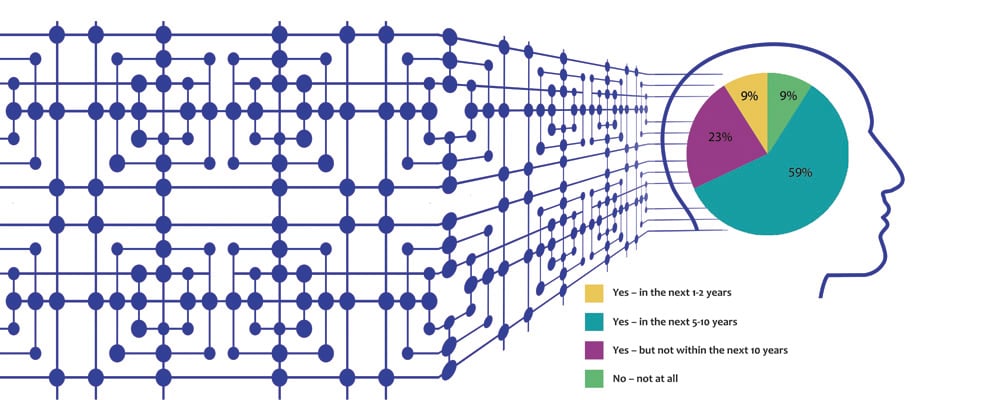

Those views largely align with those of the general counsel surveyed across Europe on AI.

Only 9% of those surveyed anticipate AI becoming a disruptor in the legal industry within the next two years. A further 59% expect it to become one within the next five to ten years, while the remaining 32% say it will not be a disruptor within the next decade.

Understanding Argument – Professor Katie Atkinson, head of the department of computer science at Liverpool University

‘You can model argumentation in all sorts of different specific domains, but it’s particularly well suited to law because arguments are presented as part of legal reasoning.

It could be a computer arguing with a human, or it could be two machines arguing autonomously. But it’s basically looking at how arguments are used in order to justify a particular decision and not another alternative. We do that through constructing “formal models”, so you can write algorithms that can then decide which sets of arguments it is acceptable to believe together. That provides you with automated reasoning and, ultimately, a decision about what to do and why.

It’s not necessarily trying to replicate what humans do, it’s more trying to take inspiration from how humans argue and then get an idealised version of that. There’s literature going back to the time of the ancient Greek philosophers on how people argue, so we take a lot of inspiration from that work, and also from legal theories – we look specifically at how legal argumentation is conducted.

What you’re trying to do is come up with a model that can be applicable over multiple situations and then you just feed in the specific facts of specific cases into those models to determine the decision in that case.

Looking at an idealised form of argumentation doesn’t necessarily mean that we can’t consider nuances, preferences and subjective information – all of which can also be modelled.

My own particular research has looked at how we can build these models of argument and then test them using sets of legal cases that are well known in the published literature on AI and law. Over three domains we got a 96% success rate in replicating the human judges’ decision and reasoning, and we’ve published work on that evaluation exercise.’
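Atkinson describes writing algorithms that decide which sets of arguments it is acceptable to believe together. As a rough illustration of that idea only, the sketch below uses a standard textbook formalism, Dung's abstract argumentation framework, and computes its grounded extension: the most sceptical set of arguments that can be defended against every attack. This formalism is an assumption chosen for illustration, not a reconstruction of Atkinson's own models, and the three arguments in the example are hypothetical.

```python
from typing import Dict, Set, Tuple


def grounded_extension(arguments: Set[str], attacks: Set[Tuple[str, str]]) -> Set[str]:
    """Grounded extension of an abstract argumentation framework.

    `attacks` contains pairs (a, b) meaning "argument a attacks argument b";
    every argument mentioned in `attacks` must also appear in `arguments`.
    Starting from the empty set, we repeatedly accept each argument whose
    attackers are all attacked by something already accepted, until the
    set stops changing (the least fixed point).
    """
    attackers: Dict[str, Set[str]] = {arg: set() for arg in arguments}
    for attacker, target in attacks:
        attackers[target].add(attacker)

    accepted: Set[str] = set()
    while True:
        # Arguments attacked by an accepted argument count as defeated.
        defeated = {target for attacker, target in attacks if attacker in accepted}
        # Accept every argument all of whose attackers are defeated
        # (unattacked arguments qualify immediately).
        defended = {arg for arg in arguments if attackers[arg] <= defeated}
        if defended == accepted:
            return accepted
        accepted = defended


# Hypothetical dispute: A = "the claimant owns the land", B = "the deed is forged",
# C = "a handwriting expert confirms the signature is genuine".
print(sorted(grounded_extension({"A", "B", "C"}, {("B", "A"), ("C", "B")})))  # ['A', 'C']
```

In the example, the unattacked expert evidence defeats the forgery allegation, which in turn reinstates the ownership claim. Because every accepted argument can be traced back through the attacks it survives, the result comes with its own justification rather than a bare answer.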

While the legal industry may be a lucrative market for software developers, it does not offer the potential gains of sectors such as healthcare or finance.

‘In AI, we see many developments with our cars, many developments in the healthcare sector, and therefore changes in these sectors will probably come faster. In the legal field we do see changes, but I don’t think we are at the edge of it,’ notes Martinaud.

With relatively few lawyers using AI solutions at present (8.8% of survey respondents reported using them, all for low-level work), perhaps it’s time for a reality check.

Applications

For many in-house counsel, the chat bot is the most readily available example of how AI (in a first-stage form) can make life easier for in-house teams. At IBM, Martinaud is using Watson, the company’s deep learning AI for business. Through this platform, the legal team uses a chat bot called Sherlock, which can field questions about IBM itself, such as its structure, address and share capital. The team is also working on a bot to answer the myriad queries generated by the business on the topic of GDPR, and on a full data privacy adviser tool which can receive and answer questions in natural language.

‘These are the kinds of questions you receive when you’re in the legal department, and it’s very useful to have these tools to answer them or to ask the business to use the tool instead of asking you,’ says Martinaud.

‘An additional example of Watson-based technology that the legal team has started using is Watson Workspace, a team collaboration tool that annotates, groups the team’s conversations, and proposes a summary of key actions and questions, which is organised and prioritised.’

Another early use-case for AI in the legal profession has been the range of tools aiming to improve transparency in billing arrangements.

Last year, IBM stepped into the mix with Outside Counsel Insights, an AI-based invoice review tool aimed at analysing and controlling outside counsel spend. A product like Ping – an automated timekeeping application for lawyers – uses machine learning to track lawyers as they work, then analyses that data, turning it into a record of billable hours. While aimed at law firms, its applications have already proved more far-reaching.

Daniel Saunders, chief executive of L Marks, an investment and advisory firm that specialises in applied corporate innovation, says the technology is a useful means of monitoring service providers’ performance.

‘As someone who frequently is supported by contract lawyers et al., increasing the transparency between the firms and the clients is essential. Clients have no issue paying for legal work performed, but now we want to accurately see how this work translates to billable hours,’ he says.

‘Furthermore, once law firms implement technologies like Ping, the data that is gathered is going to be hugely beneficial in shaping how lawyers will be working in the future.’

Information management

Among the corporate legal departments that took part in the research for this report, the clear frontrunner for AI-related excitement was information management, an area that stands to benefit particularly from developments such as smart contracting tools.

‘Legal documents contain tremendous knowledge about the company, its business and risk profiles. This is valuable big data that is not yet fully explored and utilised. It will be interesting to see what AI can do to create value from this pool of big data,’ says Martina Seidl, general counsel, Central Europe at Fujitsu.


For Nina Barakzai, general counsel for data protection at Unilever, AI-powered contracting has proved to be a real boon in understanding the efficacy of privacy controls along the supply chain.

‘Our combined aim is to make our operations more efficient and reduce the number of controls that are duplicated. Duplication doesn’t help those who are already fully loaded with tasks and activities. Where there is confusion, it should be easy to find the answer to fix the problem,’ she says.

‘That’s where AI contracting really comes into play, because you analyse how you’re doing, where your activity is robust and where it isn’t. It’s a kind of issue spotting – not because it’s a problem but because it could be done differently.’

At real estate asset management company PGIM Real Estate, the focus of AI-related efforts has been to build greater efficiency into the review of leases, to enable a more streamlined approach to due diligence. The legal team approached a Berlin-based start-up that had developed a machine-learning platform for lease review.

‘Since we do not want the machine to do the entire due diligence, we use a mixed model of the software tool reviewing documentation and then, for certain key leases which are important from a business perspective, we have real lawyers looking at the documentation as well,’ says Matthias Meckert, head of legal at PGIM.

‘It’s a lot of data gathering and a machine can do those things much better in many situations: much more exact, quicker of course, and they don’t get tired. The machine could do some basic cross checks and review whether there are strange provisions in the leases as well.’
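Meckert's mixed model, in which software reviews every lease and lawyers also look at the leases that matter most to the business, amounts to a simple routing rule. A minimal sketch, assuming a hypothetical `Lease` record, an annual-rent threshold as the stand-in for business importance, and (also an assumption) that leases in which the machine flags unusual provisions are sent to a lawyer too:

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Lease:
    name: str
    annual_rent: float  # hypothetical proxy for business importance
    # Unusual provisions reported by the (hypothetical) machine review step.
    flagged_provisions: List[str] = field(default_factory=list)


def needs_lawyer_review(lease: Lease, key_rent_threshold: float) -> bool:
    """Key leases, and leases the machine has flagged, also go to a lawyer."""
    is_key_lease = lease.annual_rent >= key_rent_threshold
    return is_key_lease or bool(lease.flagged_provisions)


def triage(leases: List[Lease], key_rent_threshold: float = 1_000_000.0) -> List[str]:
    """Every lease is machine-reviewed; return the names that also need a lawyer."""
    return [lease.name for lease in leases if needs_lawyer_review(lease, key_rent_threshold)]


portfolio = [
    Lease("Unit 1", 2_500_000.0),
    Lease("Unit 2", 80_000.0),
    Lease("Unit 3", 60_000.0, ["unusual break clause"]),
]
print(triage(portfolio))  # ['Unit 1', 'Unit 3']
```

Keeping the rule explicit means the legal team, not the tool, decides what counts as a key lease, which echoes Meckert's point that providers must be told what the client actually wants.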

Similarly, transport infrastructure developer Cintra has looked to the machine-learning sphere for the review and analysis of NDAs, and is almost ready to launch such a tool in the legal department after a period of testing is completed.

‘People always find the same dangers in that kind of contract, so it’s routine work. It can be done by a very junior lawyer – once you explain to that lawyer what the issues are, normally it’s something that can be done really quickly,’ says Cristina Álvarez Fernández, Cintra’s head of legal for Europe.

Far from being a narrative about loss of control, AI in the in-house context is as much a story of its limitations as its gains.

Do you think that AI will be a disruptor in the legal industry?

‘If your own process is not really working right, if you don’t have a clear view on what you’re doing and what are the steps in between, using a technology resource doesn’t really help you. What do I expect, what are the key items I would like to seek? You need to teach the legal tech providers what you would like to have – it’s not like somebody coming into your office with a computer and solving all your problems – that’s not how it happens!’ says Meckert.

When introducing AI solutions, or any technology for that matter, a realistic understanding of what is and isn’t possible and a thoughtful, strategic approach to deployment are critical to gaining buy-in and maximising potential.

‘The risk is of treating new software capability as a new shiny box: “I’ll put all my data into the box and see if it works”. My sense is that AI is great for helping to do things in the way that you want to do them,’ says Barakzai.

Emily Foges, CEO, Luminance

‘The decision to apply our technology to the legal profession came out of discussions with a leading UK law firm, and the idea of using artificial intelligence to support them in document review work was really the genesis of Luminance, which was a great learning experience. For example, we had to teach Luminance to ignore things like stamps – when you have a “confidential” stamp on a document, to begin with, it would try and read the word “confidential” as if it was part of the sentence.

We launched in September 2016, targeting M&A due diligence as an initial use-case: an area of legal work in pressing need of a technological solution. Since then, Luminance has expanded its platform to cater to a range of different use-cases. The key to Luminance is the core technology’s flexibility, making it easily adaptable to helping in-house counsel stay compliant, assisting litigators with investigations, and more.

When a company’s documents are uploaded to Luminance, it reads and understands what is in them, presenting back a highly intuitive, visual overview that can be viewed by geography, language, clause-type, jurisdiction and so on, according to the user’s preferences. The real power of the technology is its ability to read an entire document set in an instant, compare the documents to each other to identify deviations and similarities, then through interaction with the lawyer, work out what those similarities and differences mean.

I view the efficiency savings of Luminance as a side benefit. As a GC, you want to have control over all your documents – know what they say, know where they are, know how to find them, know what needs to be changed when a regulation comes along, and have confidence that you have not overlooked anything. I think the real change we are seeing with the adoption of AI technology by in-house counsel is that lawyers are able to spend much more time being lawyers and much less time on repetitive drudge work. They are freed to spend time providing value-added analysis to clients, supported by the technology’s unparalleled insights.’
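Foges describes comparing documents to each other to identify deviations and similarities. Luminance's own technology is not described here in enough detail to reproduce, so the sketch below swaps in a much cruder technique, character-level similarity from Python's standard `difflib` module, to show the basic idea of flagging a clause that looks unlike the same clause in other documents. The clause texts, file names and the 0.6 threshold are hypothetical.

```python
from difflib import SequenceMatcher
from typing import Dict, List


def similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1], ignoring case."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def find_deviations(clauses: Dict[str, str], threshold: float = 0.6) -> List[str]:
    """Flag documents whose clause is, on average, unlike the same clause elsewhere.

    `clauses` maps a document name to the text of one clause type
    (here, governing law) as it appears in that document.
    """
    flagged = []
    for name, text in clauses.items():
        scores = [similarity(text, other) for other_name, other in clauses.items() if other_name != name]
        if scores and sum(scores) / len(scores) < threshold:
            flagged.append(name)
    return flagged


governing_law = {
    "nda_a.docx": "This agreement is governed by the laws of England and Wales.",
    "nda_b.docx": "This agreement is governed by the laws of England and Wales.",
    "nda_c.docx": "This agreement shall be governed by the laws of England and Wales.",
    "nda_d.docx": "Any dispute shall be resolved exclusively by arbitration in Singapore.",
}
print(find_deviations(governing_law))  # ['nda_d.docx']
```

Averaging against all peers means a clause only stands out if it differs from most of the set, so the small wording change in `nda_c.docx` is tolerated. Deciding what a flagged deviation means is still left to the lawyer.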

‘If you’ve got your organisational structures right, you can put your learning base into the AI to give it the right start point. My realism is that you mustn’t be unfair to the AI tool. You must give it clear information for its learning and make sure you know what you’re giving it – because otherwise, you’ve handed over control.’

Tobiasz Adam Kowalczyk, head of legal and public policy at Volkswagen Poznan, adds: ‘Although we use new tools and devices, supported by AI technologies, we often do so in a way that merely replaces the old functionality without truly embracing the power of technology in a bid to become industry leaders and to improve our professional lives.’

What’s in the black box?

Professor Katie Atkinson is dean of the School of Electrical Engineering, Electronics and Computer Science at the University of Liverpool. She has worked closely with legal provider Riverview Law, which was acquired by EY in 2018, and partners with a number of law firms in developing practical applications for her work on computational models of argument. This field of artificial intelligence seeks to understand how people argue in order to justify decisions. Her argumentation models are tested by feeding in information from published legal cases to see whether the models produce the same answer as the original human judges.

For Atkinson, a key aspect of the work is that it does not fall prey to the ‘black box’ problem common to some AI systems.

‘There’s lots of talk at the moment in AI about the issue of an algorithm just spitting out an answer and not having a justification. But using these explicit models of argument means you get the full justification for the reasons why the computer came up with a particular decision,’ she says.

‘When we did our evaluation exercise, we could also see how and why it differed to the actual case, and then go back and study the reasons for that.’

From a practical perspective, engendering trust in any intelligent system is fundamental to achieving culture change, especially when tackling the suspicious legal mind, trained to seek out the grey areas less computable by a machine. But Atkinson also sees transparency as an ethical issue.

‘You need to be absolutely sure that the systems have been tested and the reasoning is all available for humans to inspect. The results of academic studies are open to scrutiny and are peer reviewed, which is important,’ she says.

‘But you also need to make sure that the academics get their state-of-the-art techniques out into the real world and deployed on real problems – and that’s where the commercial sector comes in, whereas academics often start with hypothetical problems. I think as long as there’s joined-up thinking between those communities then that’s the way to try and get the best of both.’

British legal AI firm Luminance also finds its roots in academia. A group of Cambridge researchers applied concepts such as computer vision – a technique typically used in gaming – in addition to machine learning, to help a computer perceive, read and understand language in the way that a human does, as well as learn from interactions with humans.

CEO Emily Foges has found that users of the platform have greater trust in its results when it creates enough visibility into its workings that they feel a sense of ownership over the work.

‘In exactly the same way as when you appoint an accountant, you expect that accountant to use Excel. You don’t believe that Excel is doing the work, but you wouldn’t expect the accountant not to use Excel. This is not technology that replaces the lawyer, it’s technology that augments and supercharges the lawyer,’ she says.

‘That means that the lawyer has more understanding and more visibility over the work they’re doing than they would do if they were doing it manually. They are still the ones making the decisions; you can’t take the lawyer out of the process. The liability absolutely stays with the lawyer – the technology doesn’t take on any liability at all – it doesn’t have to because it’s not taking away any control.’

This view of AI as a tool, rather than a worker in its own right, was central to the measured response that many of the surveyed general counsel gave to its revolutionary potential.

‘Depending on the role of AI, it can be an asset or a liability. However, AI will prove useless to make calls or decisions on specific cases or issues. It can, however, facilitate the decision-making process,’ says Olivier Kodjo, general counsel at ENGIE Solar.

A balancing act

The increasing prevalence of algorithmic decisions has caught the attention of regulators. A set of EU guidelines on AI ethics is expected by the end of 2018, although there is considerable debate among lawyers about the applicability of existing regulations to AI.

For example, the EU General Data Protection Regulation (GDPR) addresses the question of meaningful explanation in solely automated decisions based on personal data. Article 22(1) of the GDPR provides that:

‘The data subject shall have the right not to be subject to a decision based solely on automated processing, including profiling, which produces legal effects concerning him or her or similarly significantly affects him or her.’

In effect, this requires human intervention, rather than purely automated processing, in decisions that have legal or similarly significant effects on a person, such as decisions about credit or employment.

The UK has established an AI Council and a Government Office for Artificial Intelligence, and a 2018 House of Lords Select Committee report, AI in the UK: Ready, Willing and Able? recommended the preparation of guidance and an agreement on standards to be adopted by developers. In addition, the report recommends a cross-sectoral ethical code for AI for both public and private sector organisations be drawn up ‘with a degree of urgency’, which ‘could provide the basis for statutory regulation, if and when this is determined to be necessary.’

Europe’s race for global AI authority

Artificial intelligence (AI) is on course to transform the world as we know it today. A 2017 study by PwC calculated that global GDP could be 14% higher in 2030 as a result of AI adoption, contributing an additional €13.8tn to the global economy. The same study states that the largest economic gains from AI will be in China (a 26% boost to GDP in 2030) and North America (a 14.5% boost), which together will account for almost 70% of the global economic impact. In addition, International Data Corporation (IDC), a global market intelligence firm, predicts that worldwide spending on AI will reach €16.8bn this year, an increase of 54.2% over the prior 12-month period.

The frontrunners in the AI race are, unsurprisingly, China and the United States, but Europe has pledged not to be left behind. The European Commission has agreed to increase the EU’s investment in AI by 70%, to €1.5bn for the period 2018 to 2020.

Closing the gap

The European Commission has created several other publicly and privately funded initiatives to tighten the field:

- Horizon 2020 project. A research and innovation programme with approximately €80bn in public funding available over a seven-year period (2014 to 2020).

- Digital Europe Programme. A programme that will provide approximately €9.2bn over the duration of the EU’s next Multiannual Financial Framework (2021 to 2027). These funds will prioritise investment in several areas, including AI, cybersecurity and high-performance computing.

- European Fund for Strategic Investments (EFSI). An initiative created by the European Investment Bank Group and the European Commission to help close the current investment gap in the EU. The initiative aims to mobilise at least €315bn of investment in projects which focus on key areas of importance for the European economy, including research, development and innovation.

However, AI funding is not the only pursuit. While China has pledged billions to AI, it is the US that has generated and nurtured the research that makes today’s AI possible. In the research race, Europeans are striving to outpace the competition by creating pan-European organisations such as the Confederation of Laboratories for Artificial Intelligence Research in Europe (CLAIRE) and the European Laboratory for Learning and Intelligent Systems (ELLIS). CLAIRE is a grassroots initiative of top European researchers and stakeholders who seek to strengthen European excellence in AI research and innovation, while ELLIS focuses on machine learning.

One main hurdle stands in the way of Europe’s run to the finish: the EU’s General Data Protection Regulation (GDPR). GDPR regulates organisations that use or process personal data pertaining to anyone living in the EU, regardless of where the processing takes place, and it applies at a time when global businesses are competing to develop and use AI technology. While GDPR forces organisations to take better care of personal client data, it will also have repercussions for emerging technology: it is likely to make the use of AI more difficult and could slow the rapid pace of ongoing development.

Individual rights and winning the race

Europe has plotted its own course for the regulation and practical application of legal principles in the creation of AI. Basing decisions on mathematical calculations offers the prospect of objective, informed decisions, but relying too heavily on AI can also be a threat, perpetuating discrimination and restricting citizens’ rights. Policy makers, academics, private companies and even individual citizens all have a role to play in implementing and maintaining ethics standards, continuing to question AI’s evolution and, most importantly, seeking education in and awareness of AI. But it is a coordinated strategy across all of them that will make ethics in AI most successful.

‘From my end, it is the same old battle that we have experienced in all e-commerce and IT-related issues for decades now: the EC does not have a strategy and deliberately switches between goals,’ says Axel Anderl, partner at Austrian law firm Dorda. ‘For ages one could read in e-commerce related directives that this piece of law is to enable new technology and to close the gap with the US. However, the content of the law most times achieved the opposite – namely over-strengthening consumer rights and thus hindering further development. This leads to not only losing out to the US, but also being left behind by China.’

Anderl points to an uncomfortable reality nestled within the ethics side of the AI debate. While some remain concerned about the ethical questions posed by AI technology, not everyone shares that concern, and, as in most contests, those who feel unrestrained by codes of ethics will have a natural advantage in the AI arms race.

‘We are aware that if other, non-European global actors do not follow the fundamental rights or privacy regulations when developing AI, due to viewing AI from a “control” or “profit” point of view, they might get further ahead in the technology than European actors keen on upholding such rights and freedoms,’ explains a spokesperson from Norwegian law firm Simonsen Vogt Wiig AS.

‘If certain actors take shortcuts in order to get ahead, it will leave little time to create ethically intelligent AI for Europe.’

The ethics dimension also extends to the future treatment of employees. Despite headlines spelling doom for even white-collar professions, those we spoke to within in-house teams were reluctant to concede any headcount reduction due to investment in AI technology.

‘I don’t think this will replace entirely, at least so far, a person in our team other than in the future we would have a negotiation or similar, that we will cover that person with technology,’ says Álvarez Fernández, head of legal, Europe at Cintra.

‘I think this is going to help us to better allocate the resources that we have. I don’t think this will limit the human resource.’

And nor should it, says Atkinson:

‘You wouldn’t want to automate absolutely everything. One of the key aims with my work has been: let’s automate what we can, let’s try and improve consistency and efficiency,’ she says.

‘That ultimately helps the client and it frees up the people to do more of that people-facing work with their clients. People still want to speak to a human being on a variety of options and we still absolutely do need those checks from the humans on what the machines are producing.’

Whatever the future holds, the consensus for now seems to be that any true disruption remains firmly on the horizon – and although the potential is undeniably exciting, to claim that the robots are coming would be… artificial.